A Data Lakehouse is a unified data platform built on open source that covers all data disciplines

Artificial intelligence may already be a regular part of your everyday life. But with growing demands for data security and ever larger amounts of data, many organisations are struggling to combine security with the flexibility and scalability they need. Do you want to adopt the data disciplines of the future and use AI as part of an open source business strategy? Then switching to a Data Lakehouse could be a good move.

What is a Data Lakehouse?

A Data Lakehouse is a comprehensive solution that consolidates a Data Warehouse and a Data Lake into one platform. But how do the two differ, and what are the advantages and disadvantages of each?

Data Warehouse

A traditional Data Warehouse offers structured data management, but it is often an expensive and inflexible solution.

Data Lake

A Data Lake approach provides cost-effective data storage, but often lacks governance and performance.

Data Lakehouse

A Data Lakehouse brings together the best of the Data Warehouse and Data Lake into a unified data platform that focuses on data management, governance and all data disciplines to enable the active use of AI in your data and business strategy.

8 benefits of a Data Lakehouse solution

The main benefits of a Data Lakehouse are:

- Inexpensive data storage for all types of data

- Robust data governance

- Use of open data formats

- Support for all data disciplines, such as Generative AI and LLMs

- Almost unlimited scalability of data storage and compute power

- Consolidation of data silos into a unified platform

- Reduction of corporate technical debt

- Decoupling of the data storage and compute layers, so compute can be scaled for performance independently of storage

Separating compute and data storage for greater flexibility and scalability

In a Data Lakehouse, compute (the processing power used to run jobs) and data storage are separated. In the past, data platforms were tied to the underlying storage infrastructure, so if you needed more compute, you also had to upgrade your storage, and vice versa.

When compute and data storage are separated, you are not locked into one large and expensive platform. Instead, you get a flexible and scalable solution where you only pay for what you use.

This provides the following benefits:

- Cheap data storage: 1 terabyte (TB) costs approx. 150 DKK per month.

- Cheap clusters: a cluster with 14 GB of memory and 4 cores costs approx. 25 DKK per hour.

- Multiple clusters that do not interfere with each other's jobs and development

- Almost unlimited possibilities for scaling up data storage or your clusters' memory and cores.
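To make the pay-for-what-you-use idea concrete, here is a minimal Python sketch of a monthly estimate using the approximate prices quoted above (150 DKK per TB per month, 25 DKK per cluster hour). The function name `monthly_cost` and the usage pattern are hypothetical, and real prices vary by provider, region and agreement.

```python
# Approximate prices quoted in the text (DKK). Treat them as placeholders:
# real prices depend on provider, region and agreement.
STORAGE_DKK_PER_TB_MONTH = 150.0
CLUSTER_DKK_PER_HOUR = 25.0  # one cluster with 14 GB memory and 4 cores


def monthly_cost(storage_tb: float, clusters: int, hours_per_cluster: float) -> dict[str, float]:
    """Hypothetical estimate of a month's bill when storage and compute are billed separately.

    Storage is charged for the data kept; compute only for the hours the
    clusters actually run, so each term can be scaled on its own.
    """
    storage = storage_tb * STORAGE_DKK_PER_TB_MONTH
    compute = clusters * hours_per_cluster * CLUSTER_DKK_PER_HOUR
    return {"storage": storage, "compute": compute, "total": storage + compute}


if __name__ == "__main__":
    # Hypothetical usage: 10 TB of data, two clusters running
    # 8 hours a day for 22 working days.
    bill = monthly_cost(storage_tb=10, clusters=2, hours_per_cluster=8 * 22)
    print(bill)  # {'storage': 1500.0, 'compute': 8800.0, 'total': 10300.0}

    # Doubling the number of clusters leaves the storage cost untouched.
    print(monthly_cost(storage_tb=10, clusters=4, hours_per_cluster=8 * 22))
```

Because storage and compute are separate terms, you can add clusters for a busy period without paying for more storage, or keep years of history without paying for idle compute.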

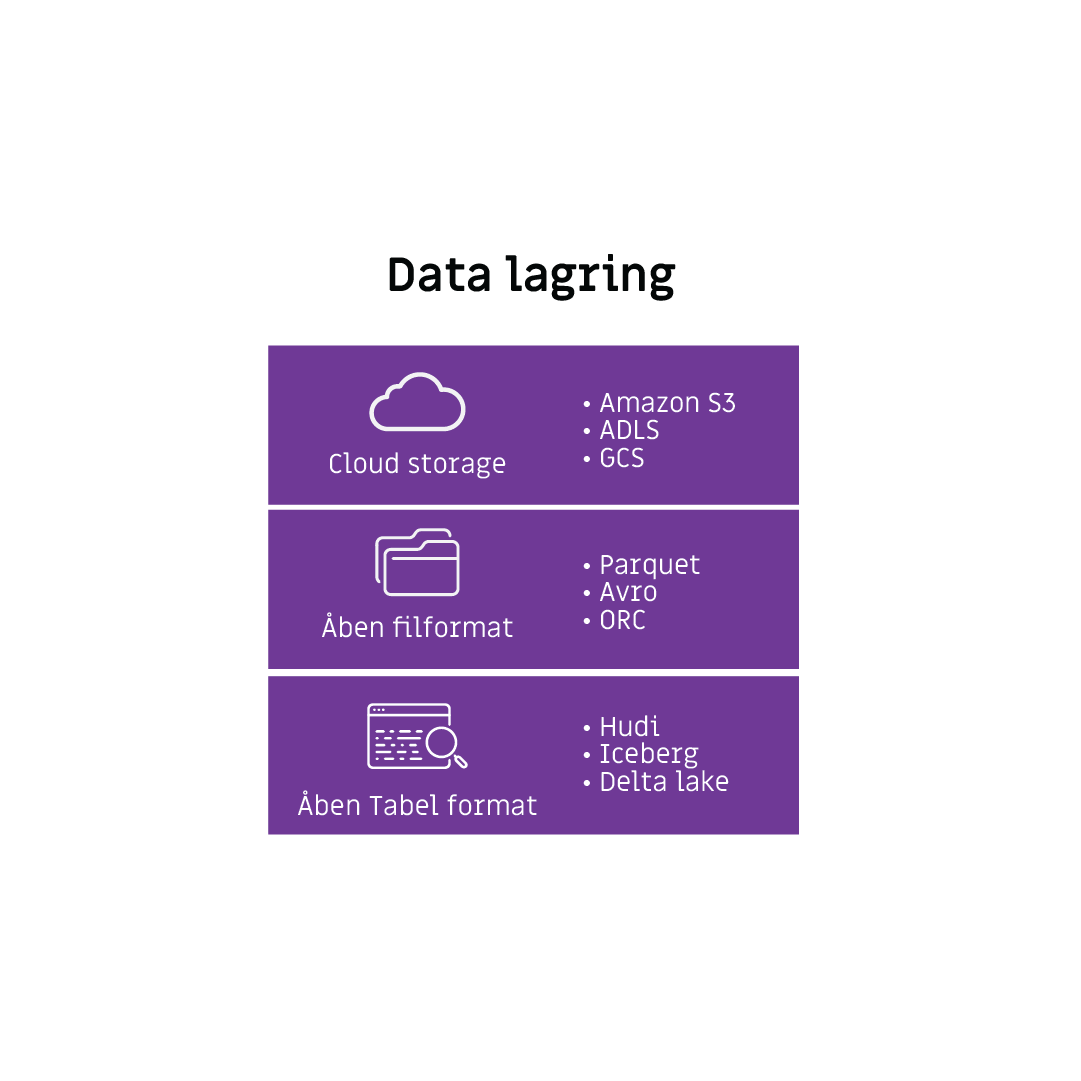

An open and standardised format without limitations

A Data Lakehouse uses open and standardised formats. This means that the same data can be read by many different systems and programming languages without being tied to a single tool.

In addition, you are not locked into a programming language dictated by a specific software vendor or technology. This makes it easier to work with data across systems and technologies.

For example, the open format allows you to use these different programming languages:

- Python

- R

- Scala

- SQL

You can also work with all types of data:

- Structured

- Semi-structured

- Unstructured

This flexibility in both data types and programming languages means that data is not only stored efficiently but can also be used in real time. When data is accessible and stored in open formats, you can work with advanced technologies such as real-time streaming and AI directly in the lakehouse architecture.

Data Lakehouse supports real-time streaming and AI

A Data Lakehouse can handle both big data and real-time processing, making it well suited for use cases such as:

- Internet of Things (IoT)

- Generative AI

- Large language models (LLM)

- Agentic AI

- Fraud detection

This makes the Data Lakehouse an obvious platform for the data-driven solutions of the future.
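As an illustration of real-time processing, the Python sketch below handles transactions in small micro-batches (the processing model used by, for example, Spark Structured Streaming) and keeps per-account state between batches. The class names and the fraud rule (flag an amount more than three times the account's running average once three transactions have been seen) are hypothetical and only stand in for a real detection model.

```python
from dataclasses import dataclass, field


@dataclass
class AccountStats:
    count: int = 0
    mean: float = 0.0

    def update(self, amount: float) -> None:
        # Incremental running mean, so no transaction history has to be kept.
        self.count += 1
        self.mean += (amount - self.mean) / self.count


@dataclass
class FraudMonitor:
    """Toy stateful stream processor: state survives between micro-batches."""

    factor: float = 3.0   # hypothetical rule: flag if amount > factor * running mean
    min_history: int = 3  # do not judge accounts we have barely seen
    stats: dict[str, AccountStats] = field(default_factory=dict)

    def process_batch(self, batch: list[tuple[str, float]]) -> list[tuple[str, float]]:
        """Return the (account, amount) pairs in this batch that look suspicious."""
        flagged = []
        for account, amount in batch:
            s = self.stats.setdefault(account, AccountStats())
            if s.count >= self.min_history and amount > self.factor * s.mean:
                flagged.append((account, amount))
            # Flagged amounts still update the mean in this simple sketch.
            s.update(amount)
        return flagged


if __name__ == "__main__":
    monitor = FraudMonitor()
    batches = [
        [("acc-1", 100.0), ("acc-2", 40.0), ("acc-1", 120.0)],
        [("acc-1", 90.0), ("acc-2", 35.0)],
        [("acc-1", 1500.0), ("acc-2", 45.0)],
    ]
    for i, batch in enumerate(batches):
        print(i, monitor.process_batch(batch))
    # 0 []
    # 1 []
    # 2 [('acc-1', 1500.0)]
```

A real system would typically exclude flagged amounts from the running average and persist the state so it survives restarts.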

How do you get started with a Data Lakehouse?

Before you can start implementing a Data Lakehouse, there is a lot of groundwork to do, and we would be happy to help you with it.

Our process starts with getting a thorough understanding of your current data platform. Based on this, we can proceed with:

- Analysing your current data architecture and identifying business goals

- Developing a data strategy in collaboration with you

- Designing and implementing a Data Lakehouse based on your business requirements

- Automating the lakehouse setup via Infrastructure as Code (Terraform)

- Integrating AI and generative AI to maximise the value of your data

Want to take your data platform to the next level? Then let's talk about how a Data Lakehouse can give your organisation new capabilities that fit the data disciplines of the future.

Why choose us?

We specialise in on-premises and cloud platforms, helping companies implement tailored Data Lakehouse solutions. Our approach ensures that your organisation gets maximum value from your data.

- We are cloud-agnostic in our approach. That's why we can help you implement your Data Lakehouse regardless of which cloud provider you use. We have deep knowledge of the major cloud providers and platforms, such as Azure, AWS, IBM and Databricks.

- We can implement state-of-the-art AI and generative AI solutions directly in your data platform

- We create a Data Lakehouse tailored to your needs and built on infrastructure as code.

Frequently asked questions and answers


What is a Data Lakehouse?

A Data Lakehouse is a modern data architecture that combines the benefits of a Data Warehouse and a Data Lake. This means that companies can store and analyse all types of data on one platform without having to move data between different systems.


How does a Data Lakehouse differ from a Data Warehouse?

A Data Warehouse is optimal for structured data and BI reporting, but can be expensive to scale and less flexible. A Data Lakehouse retains the governance and performance of a Data Warehouse, but provides the flexibility and scalability of a Data Lake.


How does a Data Lakehouse differ from a Data Lake?

A Data Lake makes it easy and affordable to store large amounts of data, but often lacks the governance, security and performance needed for analytics. A Data Lakehouse adds these elements so organisations can use their data more effectively.


What are the benefits of a Data Lakehouse?

- Affordable and flexible data storage

- Strong governance and data security

- Supports BI, real-time analytics and AI/ML

- Scalable and open architecture without vendor lock-in

- Consolidates data silos into one platform


Which organisations need a Data Lakehouse?

A Data Lakehouse is particularly relevant for companies that:

- Work with large amounts of data and need fast access

- Develop AI and machine learning models

- Want real-time analytics

- Want to avoid vendor lock-in and benefit from open data formats


How does a Data Lakehouse improve data management and governance?

A Data Lakehouse provides advanced tools for access control, data lineage and compliance. These ensure that data is accessible to the right people without compromising security.
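Lineage is usually captured automatically by the platform's catalog, but the questions it answers can be sketched in a few lines of Python. The table names and the in-memory `LINEAGE` mapping below are hypothetical; `upstream` finds every source a table is built from, and `downstream` finds every table that inherits data from a source, for example to see where personal data from a customer table ends up.

```python
from collections import deque

# Hypothetical lineage metadata: each table maps to the tables it is built from.
LINEAGE: dict[str, list[str]] = {
    "raw.orders": [],
    "raw.customers": [],
    "raw.web_events": [],
    "silver.orders_clean": ["raw.orders"],
    "silver.customers_clean": ["raw.customers"],
    "gold.sales_by_customer": ["silver.orders_clean", "silver.customers_clean"],
}


def upstream(table: str, lineage: dict[str, list[str]]) -> set[str]:
    """Return every table that `table` depends on, directly or indirectly (BFS)."""
    seen: set[str] = set()
    queue = deque(lineage.get(table, []))
    while queue:
        parent = queue.popleft()
        if parent not in seen:
            seen.add(parent)
            queue.extend(lineage.get(parent, []))
    return seen


def downstream(table: str, lineage: dict[str, list[str]]) -> set[str]:
    """Return every table affected if `table` changes, by reversing the edges."""
    children: dict[str, list[str]] = {}
    for child, parents in lineage.items():
        for parent in parents:
            children.setdefault(parent, []).append(child)
    # Walking "upstream" in the reversed graph visits the descendants.
    return upstream(table, children)


if __name__ == "__main__":
    print(sorted(upstream("gold.sales_by_customer", LINEAGE)))
    print(sorted(downstream("raw.customers", LINEAGE)))
    # ['gold.sales_by_customer', 'silver.customers_clean']
```

The same traversal supports impact analysis before a schema change: anything returned by `downstream` may need to be checked or rebuilt.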


Does a Data Lakehouse support Generative AI and Large Language Models (LLMs)?

Yes, a Data Lakehouse is optimised for AI disciplines, including Generative AI and LLMs, as it can handle both structured and unstructured data in large volumes.


What are the technical requirements for implementing a Data Lakehouse?

The technical requirements depend on the chosen platform, but typically include:

- Cloud or on-premises infrastructure with scalable storage capacity

- Support for open data formats such as Parquet and Delta Lake

- Tools for data integration, AI and analytics
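Open formats mean a table can be inspected without a vendor's tools. A Delta Lake table, for example, is a set of Parquet data files plus a transaction log of newline-delimited JSON commits in a `_delta_log` directory. The Python sketch below replays the `add` and `remove` actions to find which data files make up the current version. The function names are hypothetical, and the sketch assumes every JSON commit from version 0 is still present and ignores checkpoint files; a production reader such as Spark or the delta-rs library handles those details.

```python
import json
import re
from pathlib import Path

# Commit files are named with a 20-digit, zero-padded version number,
# so sorting the names also sorts the versions.
COMMIT_FILE = re.compile(r"^\d{20}\.json$")


def load_commits(table_path: str) -> list[str]:
    """Read the JSON commit files of a Delta table, oldest first."""
    log_dir = Path(table_path) / "_delta_log"
    files = sorted(p for p in log_dir.iterdir() if COMMIT_FILE.match(p.name))
    return [p.read_text(encoding="utf-8") for p in files]


def active_files(commits: list[str]) -> set[str]:
    """Replay add/remove actions to find the data files in the latest version.

    Each commit holds one JSON action per line. Other action types
    (metaData, protocol, commitInfo) are ignored in this sketch.
    """
    live: set[str] = set()
    for commit in commits:
        for line in commit.splitlines():
            if not line.strip():
                continue
            action = json.loads(line)
            if "add" in action:
                live.add(action["add"]["path"])
            elif "remove" in action:
                live.discard(action["remove"]["path"])
    return live


if __name__ == "__main__":
    # Two simplified, hypothetical commits (real actions carry more fields):
    # version 0 writes two files, version 1 replaces one of them.
    v0 = "\n".join([
        '{"add": {"path": "part-0000.parquet", "dataChange": true}}',
        '{"add": {"path": "part-0001.parquet", "dataChange": true}}',
    ])
    v1 = "\n".join([
        '{"remove": {"path": "part-0000.parquet", "dataChange": true}}',
        '{"add": {"path": "part-0002.parquet", "dataChange": true}}',
    ])
    print(sorted(active_files([v0, v1])))  # ['part-0001.parquet', 'part-0002.parquet']
```

For a table on local disk, `active_files(load_commits("/path/to/table"))` would list its current Parquet files under the same assumptions.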


What are the financial benefits of a Data Lakehouse?

A Data Lakehouse reduces the cost of data storage and processing by supporting scalable and flexible solutions. Organisations can invest in exactly the capacity they need without being locked into expensive proprietary solutions.


How do you get started with a Data Lakehouse?

The process for getting started with a Data Lakehouse looks something like this:

- Analysing your current data architecture and business goals

- Creating a data strategy

- Designing and implementing a Data Lakehouse based on your needs

- Automating the setup via Infrastructure as Code (Terraform)

- Integrating AI and Generative AI to maximise business value